Kainos and AI: The AI NI Hackathon; How We Won It, What We Built and Why Hackathons Matter

Kainos and AI: The AI NI Hackathon; How We Won It, What We Built and Why Hackathons Matter

'Networking the Northern Ireland AI community is the core mandate that drives AI NI. This will be achieved through organising events that target a wide range of demographics that include companies, students and academia.'

- AI NI?�?

On the 13th and 14th of April, AI NI held their 'Good AIdea Hackathon'. The theme of the event was 'AI for Good', and it turned out to be the largest hackathon ever run in Northern Ireland with over 200 attendees taking part across two locations.

My Team

As a member of the Kainos AI Practice, I felt like this hackathon would be a good chance for me to show off and improve my skills and knowledge in AI. My team consisted of myself and the other software engineers from the AI Practice. We decided beforehand that we were going to enter this hackathon as a team.

Challenges

There were three challenges that attendees of the hackathon were able to take part in:

1: The Peltarion Challenge. This involved taking data from hearing aids and using AI to help improve them. The example idea given was to help allow the hearing aids to eliminate sound coming from more than one source using AI to detect different voices and background noise.

2. The BazaarVoice Challenge. This involved taking reviews, both legit and bot-generated, and trying to build an AI model that could tell the difference between them. This is the challenge that my team decided to take on.

3. The AI NI Challenge. This was a challenge for any other ideas that the teams had that fell under the banner of 'AI for Good'.

Our Idea

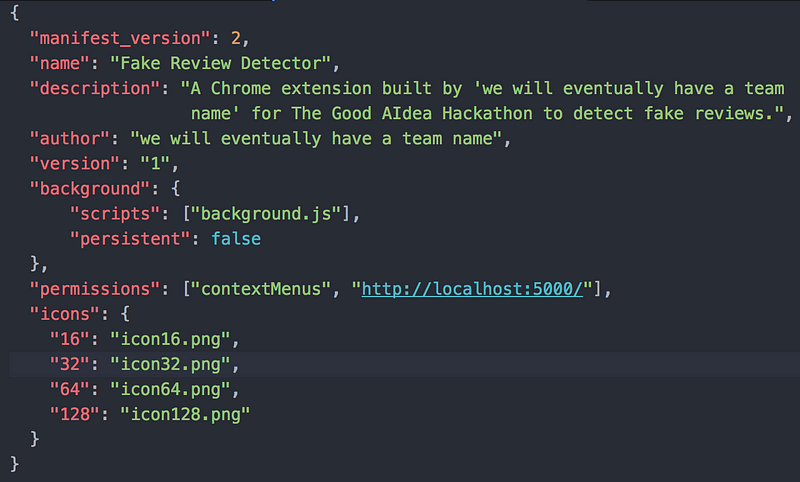

As previously mentioned, our team took on the BazaarVoice challenge, but we also had a mindset focused on how we could take that and use it as an 'AI for good' in order to also fulfil the criteria of the AI NI challenge, which gave us the potential idea of using something like this to detect cyber bullying in tweets. This manifested in the idea of a Google Chrome extension to help users detect fake reviews by allowing users to select a piece of text, for example a review for a product on Amazon, and then this would be run through a classification algorithm and classified as either 'real' or 'fake' and this would be shown to the user. We also decided to build a webapp with the same functionality to ensure that users who aren't on Google Chrome would be able to benefit from the use of our project. This would connect to the same API as the Chrome extension (more on that later).

We had a few challenges and obstacles to overcome to make this project a reality. First of all, accuracy is a big deal when it comes to AI and so we had to build our project to have the highest accuracy possible (which ended up being 95.75%). This is important as it cuts down on the number of falsely flagged reviews (i.e. false positives and false negatives). Additionally, we didn't really have much experience in building Chrome extensions, so this was something that we would have to learn. We also had to set up a repository, branches and automated testing.

Goodies and Perks

We were given a T-shirt as a thank you for attending the event. We were also given free food and drinks for the weekend, as well as water bottles and AWS credits.

Day 1??? ??Development

Day 1??? ??Development

We arrived at the hackathon between 9 and 10 AM on Saturday. Once all the attendees had arrived, we were taken into the lecture theatre where we were briefed about all of the events that would take place over the weekend, the challenges that we would be taking part in, and the prizes that the winners would be receiving. Workshops were given based around data science, text classification and pitching.

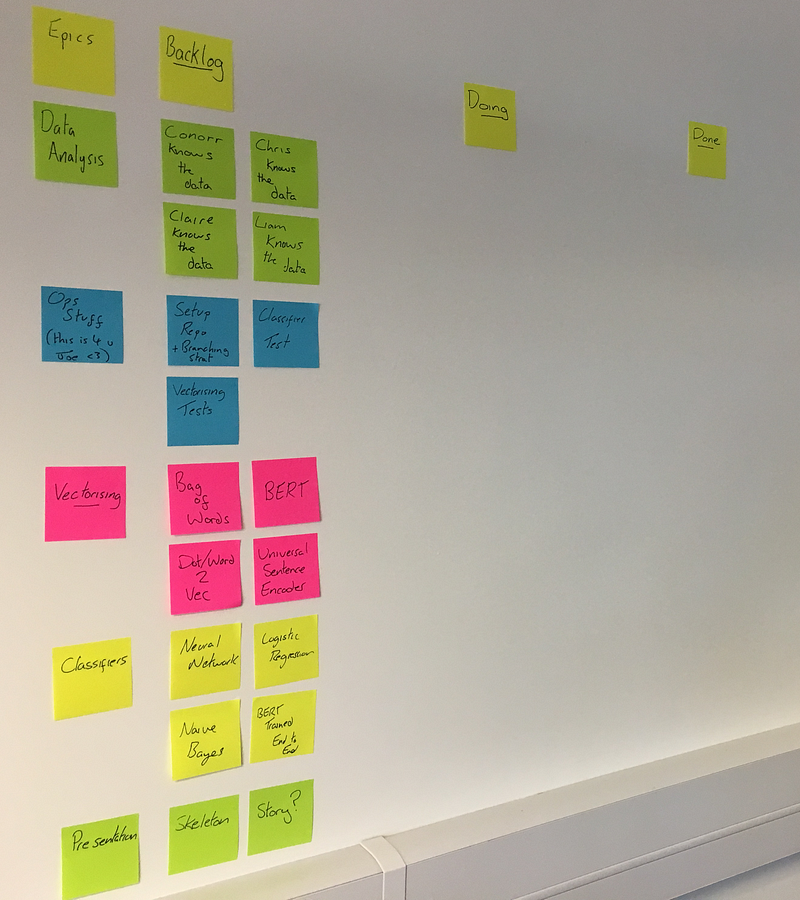

After this, we got back to our room and we started to work on our project. We initially started by planning out what we would do and setting up a Kanban board to be able to track what had been done, what was currently being done, and what needed to be done. This gave us a good idea of all the work that we needed to put into our project.

We found that using a 'Bag of Words' vectoriser along with a 'Neural Network' classifier gave us the best results so that's the combination we decided to use.

Our team put an emphasis on working under good data science principles, in a measured way that put a process around how we write and review different AI models and components. In addition to the three classifiers and vectorisers that we had put to the test, we also ran a test on BERT, which is a Google-developed deep learning natural language framework built specifically to be able to tackle any natural language machine learning problem. Due to BERT's low level implementation and the time it takes to be trained, we did not integrate our BERT model into the full system and instead built that with a simpler model that we were able to validate much quicker.

Meanwhile, we also had to take frontend development into consideration. As previously mentioned, we decided to build a Chrome extension and a webapp. I took on the responsibility of leading the dev work on these frontend implementations. Having never worked with Chrome extensions before, I decided to read through some tutorials in order to make sure I understood how they worked, then got started building ours.

Day 2??? ??Pitching

On the Sunday morning, the only thing we had to do in terms of development was to finalise the API and tie everything together.

There were a number of spot prizes, as well as the main prizes which went to:

3rd Place??? ??DiverseCV, which is a system to remove the potential of both intentional and unintentional bias by redacting sensitive information from applicants' CVs before the employer can view it to pass judgement.

2nd Place??? ??Team Blackball, who created a system to help teach users spell their names in British Sign Language using image detection.

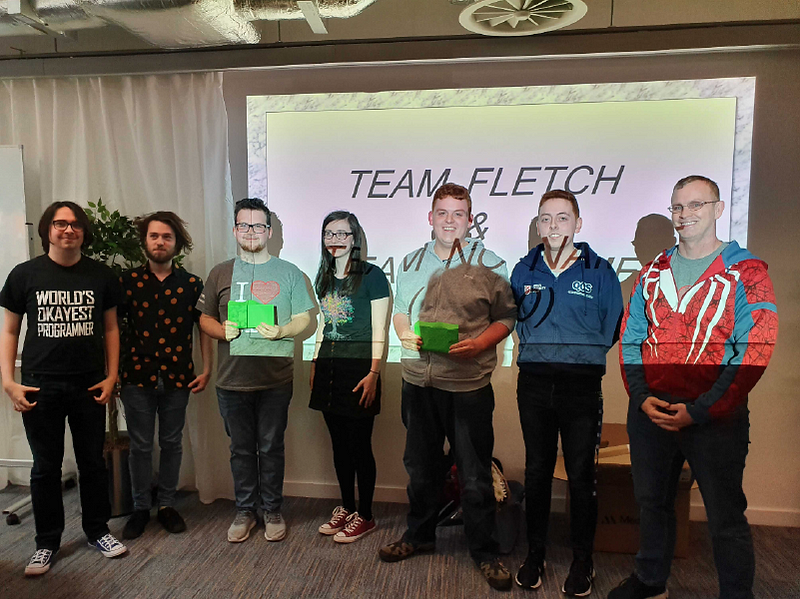

1st Place??? ??Tied between Team Fletch and my team, who both created systems for telling fake and real reviews apart.

Why Hackathons Matter

This leads me on to my final point of this article, why hackathons actually matter.

For me, the time constraints put on attendees of hackathons means that they will have to focus on the most important things and not get bogged down on small details. It encourages a way of thinking through problems to find and implement a solution in the fastest time possible. It builds confidence in the abilities of developer and enables them to learn and improve their skills in a way that normal development simply can't replicate. From my own perspective, I believe my skills and confidence surrounding AI have improved as a result of this hackathon, and I hope to be able to use and improve these skills in work projects and in further hackathons.